In an early July blog post on the evolution of the Robots Exclusion Protocol (REP), Google made two big announcements: it is moving the REP towards “Internet Standard” status, and it is open sourcing its robots.txt parser.

For SEO practitioners, these are big developments. Google’s move will provide new insight into how Googlebot interprets robots.txt files, but it also means that some enterprise websites may require tweaks to ensure they are ready and optimized for the new standard.

What is the Robots Exclusion Protocol (REP)?

The Robots Exclusion Protocol (REP) is a group of standards that regulate how websites communicate with web crawlers and other web robots. REP has been a basic component of the web for decades. It specifies how to tell Googlebot (or any other web robot) which areas of a website it is allowed to process or scan, and which are out of bounds.

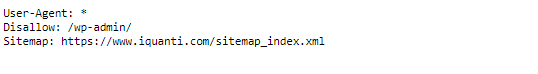

Robots.txt is a file that webmasters create to specify to the search bots what they can and cannot crawl on their website, and how they are expected to treat page-, subdirectory- or site-wide content and links.

In other words, robots.txt allows website owners to block web crawlers from accessing their sites, either partially or entirely. Thanks to the Robots Exclusion Protocol, site owners can tell search bots not to crawl certain web pages, such as content that is gated or meant for internal company use only.
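To make this concrete, here is a minimal sketch that checks which URLs a crawler may fetch under a small robots.txt file. It uses Python's standard-library `urllib.robotparser`, which is not Google's parser and may behave differently in edge cases. The rules and the `example.com` URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, made up for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal/
Disallow: /gated/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A public page is crawlable; a page under /internal/ is not.
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/post"))        # True
print(parser.can_fetch("Googlebot", "https://www.example.com/internal/report"))  # False
```

Keep in mind that robots.txt only asks crawlers to stay away. It does not technically prevent access to a page.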

Why is Google open sourcing its robots.txt parser?

Robots.txt is a de facto standard, which means that it is not owned by any standards body. Since the publication of the original “A Standard for Robot Exclusion” in 1994, robots.txt has been widely used across the web. But despite its ubiquity, the REP never graduated to an officially recognized Internet Standard, that is, a technical standard certified by the Internet Engineering Task Force (IETF). As a result, developers have interpreted the protocol somewhat differently over the years.

Also, the REP hasn’t been meaningfully updated since it was first introduced. This posed a challenge for webmasters, who struggled to write robots.txt rules correctly.

Google has announced that open sourcing its robots.txt parser is “a part of its efforts to standardize REP”, and that starting on 1 September 2019, Google will drop its support for non-standard rules in robots.txt, such as nofollow, noindex and crawl-delay. In its announcement about formalizing the REP specification, Google clearly states that the new and revised REP draft submitted to the IETF “doesn’t change the rules created in 1994, but rather defines essentially all undefined scenarios for robots.txt parsing and matching, and extends it for the modern web.”

The parser's source code is available on GitHub in the google/robotstxt repository.

Most notable changes to robots.txt (and how to get ready for them)

Below, I have listed the three most significant rules and features of REP that are being sunsetted. I have also suggested alternate implementations, where available.
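A quick way to see whether a site is affected is to scan its robots.txt for these rules. The sketch below is a simple line-based check, not Google's parsing logic. The `UNSUPPORTED` tuple contains only the three rules named in this article, and the function name and sample file are hypothetical.

```python
# Rules named in this article as losing support. This is an assumption made
# for illustration, not an official or exhaustive list.
UNSUPPORTED = ("crawl-delay", "nofollow", "noindex")


def find_unsupported_rules(robots_txt: str) -> list[tuple[int, str, str]]:
    """Return (line_number, directive, value) for each unsupported rule found."""
    findings = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if ":" not in line:
            continue  # blank line or something that is not a "key: value" rule
        directive, value = line.split(":", 1)
        directive = directive.strip().lower()
        if directive in UNSUPPORTED:
            findings.append((number, directive, value.strip()))
    return findings


# Hypothetical robots.txt used only to demonstrate the check.
SAMPLE = """\
User-agent: *
Crawl-delay: 10
Disallow: /private/
Noindex: /thank-you/
Nofollow: /sponsored/  # paid links
"""

for line_number, directive, value in find_unsupported_rules(SAMPLE):
    print(f"line {line_number}: {directive} -> {value}")
```

Each reported line is a candidate for one of the alternate implementations described in the sections that follow.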

1. Crawl-delay

The crawl-delay directive is an unofficial directive used to prevent overloading servers with too many requests.

If search engine crawls can overload your server, your hosting environment is probably underpowered. Re-evaluate it and fix the underlying problem as soon as possible.

Alternate Implementation:

No alternative available. Upgrade your hosting solution if page loads are slow or if search engine crawls are hurting performance.

2. Nofollow

The nofollow directive tells search crawlers not to pass SEO authority from one page to another.

Paid content may be tagged as nofollow to keep the hosting domain from being hit with an SEO penalty.

Alternate Implementation:

Implement nofollow at the page level via meta tags, or on a link-by-link basis.

3. Noindex

The noindex directive instructs crawlers not to index certain content.

It’s useful for legal or administrative pages and “thin content” such as thank-you pages, as well as anything Google might consider duplicate content.

Alternate Implementation:

- Use the “disallow” command in robots.txt

- Implement noindex in page-level meta tags

- Return 404 or 410 HTTP status codes (where applicable)

- Apply password protection for the pages you don’t want indexed

- Remove the URL or URLs via the Google Search Console
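Page-level robots meta tags, listed above as an alternative for both nofollow and noindex, are easy to audit once they are in place. The following sketch extracts the directives from a page's `<meta name="robots">` tag using Python's standard-library `html.parser`. The class name, function name and sample markup are hypothetical. A fuller audit would also need to check the `X-Robots-Tag` HTTP header, which this sketch does not do.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalise them.
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for token in attr.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def robots_directives(html: str) -> set[str]:
    """Return the set of robots directives declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


# Hypothetical thank-you page that should be kept out of the index.
PAGE = """
<html><head>
  <title>Thank you</title>
  <meta name="robots" content="noindex, nofollow">
</head><body>Thanks for signing up.</body></html>
"""

print(sorted(robots_directives(PAGE)))  # ['nofollow', 'noindex']
```

Note that a crawler can only see a page-level noindex tag if it is allowed to fetch the page, so combining it with a robots.txt disallow rule for the same URL may hide the tag.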

Other changes to robots.txt

Though not as significant and impactful as the three major features/rules listed above, there are additional specification changes for Googlebot robots.txt that SEOs and webmasters need to be aware of.

These include:

- Googlebot will follow 5 redirect hops as a standard.

- If the robots.txt is unreachable for more than 30 days, the last cached copy of the robots.txt will be used.

- If no cached copy is available in that situation, Google will consider there to be no crawl restrictions.

- If a robots.txt file was previously available but is currently inaccessible (due to server failures, etc.), the disallowed pages will continue not to be crawled for a substantial period of time.

- Google treats unsuccessful requests or incomplete data as a server error.

- Google now enforces a size limit of 500 kibibytes (KiB), and ignores any content after that limit – thus lessening the strain on servers.

- Robots.txt now accepts all URI-based protocols, which means it is no longer limited to HTTP and can be used for FTP or CoAP as well.

- The new maximum caching time is 24 hours (or the cache directive value, where one is specified). This means that webmasters can update their robots.txt file at any time without waiting long for the change to be picked up.
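The 500 KiB size limit in the list above is the easiest of these changes to test for. The sketch below shows how much of a robots.txt body would be read under that limit and how much would be ignored. The function name and the generated file are hypothetical, and the sketch cuts at a plain byte offset, which may not match how Google handles a rule that straddles the limit.

```python
MAX_ROBOTS_BYTES = 500 * 1024  # 500 KiB, the limit quoted in the list above


def apply_size_limit(body: bytes, limit: int = MAX_ROBOTS_BYTES) -> tuple[bytes, int]:
    """Return the bytes a crawler would read and the number of bytes ignored."""
    kept = body[:limit]
    return kept, len(body) - len(kept)


# Hypothetical oversized file: 50,000 copies of a 13-byte rule.
oversized = b"Disallow: /a\n" * 50_000

kept, ignored = apply_size_limit(oversized)
print(f"file size: {len(oversized)} bytes")
print(f"read:      {len(kept)} bytes")
print(f"ignored:   {ignored} bytes")
```

If the ignored count is greater than zero, rules near the end of the file may never be applied, so the file should be shortened or consolidated.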

At iQuanti, we are closely monitoring these developments. We are running a critical analysis of the robots.txt files of all our enterprise clients to understand and assess the impact of these changes, and to implement changes that keep them ahead of the curve.

As a webmaster, you should have complete control over how search bots view your website, and you need to ensure the new changes are not hurting your SEO efforts. If you are looking to run an impact analysis on your own website (and we think you should), keep checking this space for our observations and learnings. We will be adding our ‘robots.txt parsing changes checklist’ here soon too.

Have any questions, or anything to add here? Please write to us now.

Learn more about iQuanti’s Search Engine Optimization Services here.